The Science of Detection: How Algorithms Decode the Hidden Patterns of Machine Writing

To many, AI detection feels like magic, or a "black box" that arbitrarily assigns percentages to a piece of text. In reality, it is grounded in computational linguistics and statistical modeling. As large language models (LLMs) become more integrated into our lives, understanding the "how" behind detection technology is crucial for developers, educators, and content strategists. At its core, an AI detector is a sophisticated pattern-matching engine that looks for the statistical "fingerprints" left behind by machines that generate text without a conscious mind.

The Two Pillars: Perplexity and Burstiness

Many detection approaches build on two core metrics to distinguish between human and machine writing:

Perplexity (The Measure of Randomness):

Think of perplexity as a measure of how "surprised" a language model is by a sequence of words. LLMs are trained to minimize perplexity on their training data, and when generating they tend to favor high-probability words, so their output is usually clear and predictable. Humans, however, are naturally chaotic. We use rare word pairings, cultural slang, and non-linear logic. If a text has very low perplexity, its words were consistently among the most statistically likely choices, which is a hallmark of AI.

Burstiness (The Measure of Variation):

Human writing "bursts." We might have a paragraph with a long, rhythmic sentence, followed by a short, punchy fragment. Our sentence structures are inconsistent because our thoughts are inconsistent. AI, being an optimization engine, tends to produce "smooth" text where sentence length and structure are relatively uniform. Detection algorithms calculate the variance in these structures; low variance is a red flag for automation.
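Both pillars can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular detector computes them: it assumes you already have per-token log-probabilities from some language model (obtaining them is model-specific), and it uses the variance of sentence lengths, with a deliberately naive regex splitter, as a stand-in for burstiness. The function names `perplexity` and `burstiness` are hypothetical.

```python
import math
import re
import statistics


def perplexity(token_log_probs: list[float]) -> float:
    """Perplexity = exp(-average natural-log probability per token).

    Assumes `token_log_probs` holds the log-probability a language model
    assigned to each token given the preceding tokens.
    """
    if not token_log_probs:
        raise ValueError("need at least one token")
    avg_log_prob = sum(token_log_probs) / len(token_log_probs)
    return math.exp(-avg_log_prob)


def burstiness(text: str) -> float:
    """Population variance of sentence lengths, measured in words.

    The regex splitter is deliberately naive (illustration only).
    """
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(s.split()) for s in sentences]
    if len(lengths) < 2:
        return 0.0
    return float(statistics.pvariance(lengths))


if __name__ == "__main__":
    # A model that gives every token probability 0.5 is as unsure as a coin flip.
    print(perplexity([math.log(0.5)] * 4))  # ≈ 2.0
    uniform = "The cat sat down. The dog ran off. The bird flew up."
    varied = ("Stop. The storm rolled in over the hills before anyone "
              "had time to react. Silence.")
    print(burstiness(uniform))  # 0.0 (every sentence has 4 words)
    print(burstiness(varied))   # 32.0 (sentence lengths 1, 13, 1)
```

Low perplexity and near-zero variance together are the "smooth" profile described above; in practice a detector would weigh these signals rather than apply a single cut-off.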

Neural Networks vs. Neural Networks

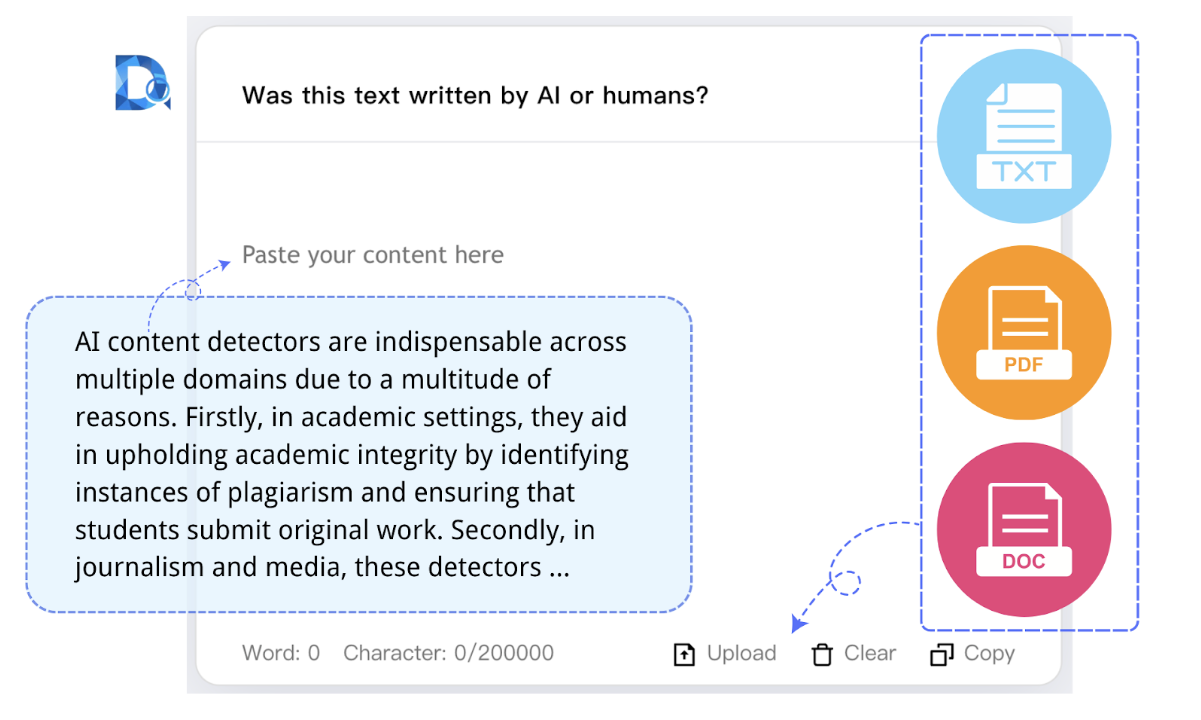

The most advanced detectors, like Decopy, don't just use simple math; they use "classifiers": other AI models trained specifically to recognize the output of LLMs like GPT-4 or Claude. This is essentially a "detective AI" trained on large volumes of "criminal AI" output.

These classifiers look for subtle patterns that a human reader would rarely catch. For example, certain LLMs have a "preference" for specific transitional phrases or a subtle tendency to avoid certain types of punctuation. The detector maps the text into a "latent space" (a learned numerical representation of the relationships between words) and checks whether it falls in regions the classifier has learned to associate with popular generative models.
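To make the classifier idea concrete, here is a toy multinomial Naive Bayes model over word counts. It is a deliberately simple stand-in: production classifiers are typically large neural networks, and the class name `NaiveBayesDetector` and the four training snippets are invented for illustration. Even so, it shows how a model can learn a statistical "preference" for phrases like "moreover" purely from labeled examples.

```python
import math
from collections import Counter


class NaiveBayesDetector:
    """Toy multinomial Naive Bayes over lowercase words (labels: "human", "ai")."""

    LABELS = ("human", "ai")

    def __init__(self) -> None:
        self.word_counts = {label: Counter() for label in self.LABELS}
        self.doc_counts = Counter()

    def train(self, text: str, label: str) -> None:
        # Record word frequencies and the document count for this label.
        self.word_counts[label].update(text.lower().split())
        self.doc_counts[label] += 1

    def prob_ai(self, text: str) -> float:
        # Assumes train() has been called at least once for each label.
        vocab = set().union(*self.word_counts.values())
        total_docs = sum(self.doc_counts.values())
        log_scores = {}
        for label, counts in self.word_counts.items():
            total_words = sum(counts.values())
            score = math.log(self.doc_counts[label] / total_docs)  # log prior
            for word in text.lower().split():
                # Add-one smoothing so unseen words don't zero out the score.
                score += math.log((counts[word] + 1) / (total_words + len(vocab)))
            log_scores[label] = score
        # Normalize the two log-scores into P(ai | text), subtracting the max for stability.
        top = max(log_scores.values())
        weights = {k: math.exp(v - top) for k, v in log_scores.items()}
        return weights["ai"] / sum(weights.values())


if __name__ == "__main__":
    detector = NaiveBayesDetector()
    detector.train("moreover it is important to note that", "ai")
    detector.train("furthermore in conclusion it is crucial", "ai")
    detector.train("honestly i dunno lol we just winged it", "human")
    detector.train("my gran swore by this recipe no idea why", "human")
    print(detector.prob_ai("moreover it is crucial to note"))  # ≈ 0.99
    print(detector.prob_ai("no idea lol"))                      # ≈ 0.14
```

Naive Bayes was chosen only because it fits in a few lines and makes the "learned preference" visible as word counts; it ignores word order entirely, which real detectors do not.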

The Challenge of "Adversarial" AI

As detection technology improves, so do the methods used to bypass it. There is currently an "arms race" between those creating "humanizing" tools (which intentionally add typos or swap in synonyms to raise perplexity) and detection platforms that are learning to see through these tricks.
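The direction of that effect is easy to check with a toy unigram language model. The sketch below is an assumption-laden illustration: the reference corpus, the synonym table, and the function names are all hypothetical, and a unigram model is far cruder than a real LLM. It scores text with add-one-smoothed word frequencies; swapping common words for rarer synonyms makes each swapped word less probable, so perplexity goes up, which is exactly the signal a humanizer is trying to push.

```python
import math
from collections import Counter

# Hypothetical reference corpus standing in for a language model's training data.
CORPUS = "we use big tools to help people and we use big data to help teams".split()
COUNTS = Counter(CORPUS)

# Hypothetical "humanizer" swap table: common words -> rarer synonyms.
SYNONYMS = {"use": "utilize", "big": "colossal", "help": "abet"}

# Vocabulary includes the swap targets so the smoothed model covers them.
VOCAB_SIZE = len(set(CORPUS) | set(SYNONYMS.values()))


def unigram_perplexity(text: str) -> float:
    """Perplexity under an add-one-smoothed unigram model built from CORPUS."""
    words = text.lower().split()
    total = sum(COUNTS.values())
    log_prob = sum(math.log((COUNTS[w] + 1) / (total + VOCAB_SIZE)) for w in words)
    return math.exp(-log_prob / len(words))


def humanize(text: str) -> str:
    """Naive synonym substitution, word by word."""
    return " ".join(SYNONYMS.get(w, w) for w in text.lower().split())


if __name__ == "__main__":
    original = "we use big tools to help people"
    rewritten = humanize(original)
    print(rewritten)  # we utilize colossal tools to abet people
    print(unigram_perplexity(original) < unigram_perplexity(rewritten))  # True
```

A detector that only thresholds perplexity is fooled by this; one that also learns what synonym-swapped text looks like (unnatural word choices at otherwise predictable positions) is harder to fool.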

This is why "static" detectors quickly become outdated. A modern system must be "dynamic," continually updating its training data to include output from the latest LLMs. It must be able to recognize when a text has been "spun" or "humanized" through automated means. This level of depth is what separates a professional tool from a simple keyword-matching script.

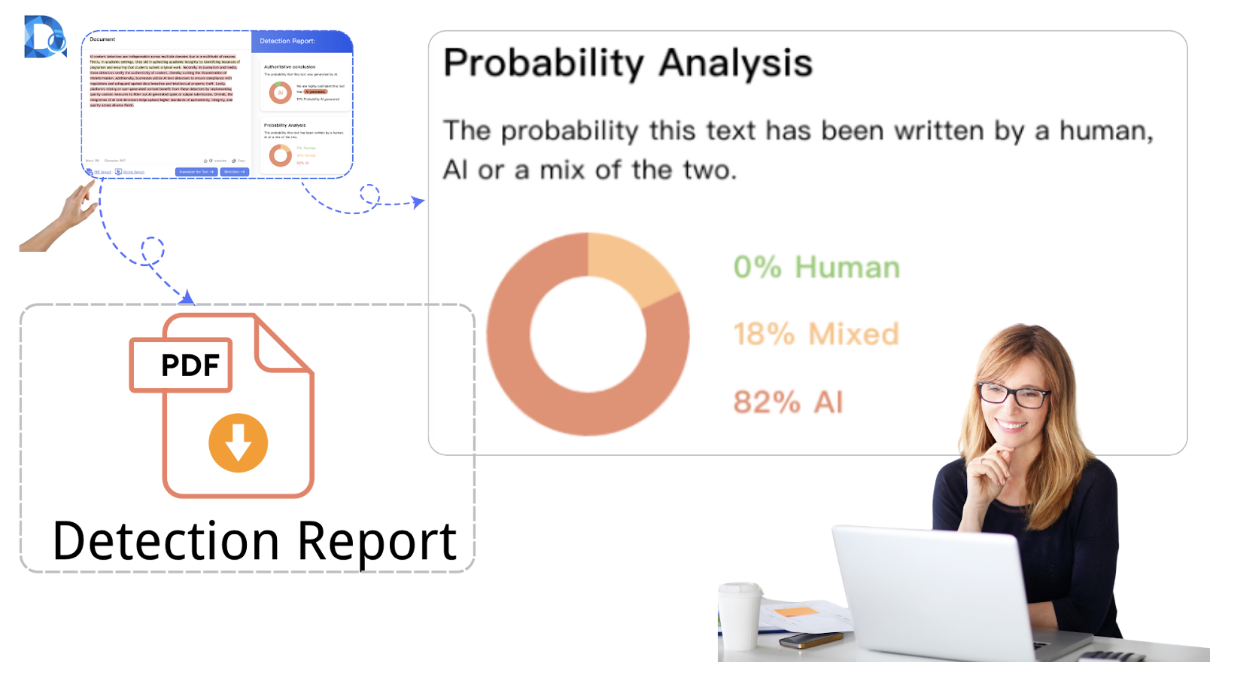

Why 100% Accuracy is a Myth (and Why Probability Matters)

It is important to be honest: no detector can be 100% accurate 100% of the time. Writing is subjective. A human who is tired or writing in a non-native language might produce text that sounds "robotic." Conversely, a very clever prompt might produce AI text that sounds remarkably human.

This is why the best tools provide a "Probability Score" rather than a definitive "Yes/No." It is about risk management. For a publisher concerned about search rankings, a "high probability" of AI is a risk factor to mitigate. For an educator, it is a reason to look closer. The tool provides the data; the human provides the judgment.
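Two short calculations illustrate why a probability beats a verdict. The first maps a classifier's raw score (a logit) to a probability with the logistic function and sorts it into bands; the 0.3 and 0.7 cut-offs are arbitrary assumptions, not any product's thresholds. The second applies Bayes' rule to show how much a single "flag" is worth: with an assumed 95% detection rate and 1% false-positive rate, if only 1% of submissions are actually AI-written, roughly half of all flagged texts were written by humans. The function names are hypothetical.

```python
import math


def to_probability(logit: float) -> float:
    """Logistic (sigmoid) function, written to avoid overflow for large |logit|."""
    if logit >= 0:
        return 1.0 / (1.0 + math.exp(-logit))
    z = math.exp(logit)
    return z / (1.0 + z)


def band(p_ai: float, low: float = 0.3, high: float = 0.7) -> str:
    """Turn a probability into a triage label (thresholds are assumptions)."""
    if p_ai >= high:
        return "likely AI: look closer"
    if p_ai <= low:
        return "likely human"
    return "uncertain: rely on human judgment"


def p_ai_given_flag(true_pos_rate: float, false_pos_rate: float, base_rate: float) -> float:
    """Bayes' rule: P(text is AI | detector flagged it)."""
    flagged_ai = true_pos_rate * base_rate
    flagged_human = false_pos_rate * (1.0 - base_rate)
    return flagged_ai / (flagged_ai + flagged_human)


if __name__ == "__main__":
    for logit in (-3.0, 0.0, 3.0):
        p = to_probability(logit)
        print(f"{p:.2f}", band(p))
    print(round(p_ai_given_flag(0.95, 0.01, 0.01), 2))  # 0.49
```

The Bayes calculation is the quantitative version of "the tool provides the data; the human provides the judgment": the same detector is far more trustworthy in a setting where AI-written text is common than in one where it is rare.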

The Role of Semantics and Context

The next frontier of detection is "Semantic Consistency." Human writers generally have a "point" they are trying to make, and they develop it with consistent nuance. AI can sometimes "drift," sounding perfect sentence by sentence but failing to hold a coherent, deep-level argument over 2,000 words.

By analyzing the "thematic glue" of a piece, high-end tools can see if the logic holds up to human standards. This is where the true value lies for businesses. It isn't just about "catching a bot"; it's about ensuring that the content is structurally sound and intellectually honest.
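One crude way to approximate "thematic glue" is to compare neighboring paragraphs and watch for sudden drops in topical overlap. The sketch below uses bag-of-words cosine similarity as an assumed stand-in; tools that analyze semantics would more plausibly use learned sentence embeddings, and the function names `cosine` and `coherence_profile` are hypothetical.

```python
import math
from collections import Counter


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def coherence_profile(paragraphs: list[str]) -> list[float]:
    """Similarity of each paragraph to the one before it; sharp dips suggest drift."""
    vectors = [Counter(p.lower().split()) for p in paragraphs]
    return [cosine(prev, cur) for prev, cur in zip(vectors, vectors[1:])]


if __name__ == "__main__":
    essay = [
        "solar panels cut energy bills",
        "solar panels need sunny roofs",
        "my favorite pasta uses garlic",
    ]
    print([round(s, 2) for s in coherence_profile(essay)])  # [0.4, 0.0]
```

Raw word overlap misses paraphrase (a paragraph about "photovoltaics" would look unrelated to one about "solar panels"), which is why embedding-based similarity is the more realistic choice for long documents.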


Verification as the New Digital Standard

As we move toward a future where 90% of online content may be AI-generated, the ability to verify the "origin of thought" will become as important as verifying a digital signature. We are entering an era of "Zero Trust" content.

Using a high-precision AI content detector is one of the most practical ways to navigate this landscape with confidence. It helps developers build better models, marketers protect their SEO, and readers know that the advice they are following actually comes from a person with lived experience. In the end, the science of detection is the science of protecting human truth in a world of infinite, synthetic echoes.